Proactive chatbots set the stage for success. They reveal their capabilities upfront, guiding users towards successful outcomes, and are essential for effective chatbot design.

Within a typical chatbot, there are four powerful LLM use cases: extraction, classification, transformation, and generation. Each of these has its own strengths and risks, and building an effective app will require all of them in some part.
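To make the four use cases concrete, here is a minimal Python sketch that keeps one prompt template per use case. The `UseCase` enum, the template wording, and `build_prompt` are hypothetical illustrations, not any particular library's API, and the call to an actual model is left out.

```python
from enum import Enum


class UseCase(Enum):
    EXTRACTION = "extraction"
    CLASSIFICATION = "classification"
    TRANSFORMATION = "transformation"
    GENERATION = "generation"


# Illustrative prompt templates, one per use case (wording is hypothetical).
TEMPLATES = {
    UseCase.EXTRACTION: "Extract the {field} from the message below. Reply with the value only.\n\n{text}",
    UseCase.CLASSIFICATION: "Classify the message below as one of: {labels}. Reply with the label only.\n\n{text}",
    UseCase.TRANSFORMATION: "Rewrite the message below as {target}.\n\n{text}",
    UseCase.GENERATION: "Write a helpful reply to the message below.\n\n{text}",
}


def build_prompt(use_case: UseCase, text: str, **params: str) -> str:
    """Fill the template for one use case with the user's text and any extra fields."""
    return TEMPLATES[use_case].format(text=text, **params)


prompt = build_prompt(
    UseCase.CLASSIFICATION,
    "I need to change my flight",
    labels="booking, cancellation, other",
)
print(prompt)
```

One design note: the extraction and classification templates constrain the reply to a single value or label, which is easier to check in code than free-form generation.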

Function calls are the unsung hero of LLM UI manipulations. While OpenAI has made great strides leveraging function calls to manipulate the UI in their demos, the rest of the industry has yet to take its first meaningful steps. But what do those steps look like?
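As a rough sketch of one such step, assuming a chat API that accepts function descriptions in JSON Schema form and returns the chosen function's arguments as a JSON string, the client can map each requested call onto a UI state change. The function names `set_theme` and `open_panel` and the `UIState` fields are hypothetical, and the model call itself is omitted.

```python
import json
from dataclasses import dataclass
from typing import Optional

# Function descriptions in the JSON Schema style used by function-calling APIs.
# The function names and parameters are hypothetical examples.
UI_FUNCTIONS = [
    {
        "name": "set_theme",
        "description": "Switch the app between light and dark mode.",
        "parameters": {
            "type": "object",
            "properties": {"mode": {"type": "string", "enum": ["light", "dark"]}},
            "required": ["mode"],
        },
    },
    {
        "name": "open_panel",
        "description": "Open a named panel in the sidebar.",
        "parameters": {
            "type": "object",
            "properties": {"panel": {"type": "string"}},
            "required": ["panel"],
        },
    },
]


@dataclass
class UIState:
    theme: str = "light"
    open_panel: Optional[str] = None


def handle_function_call(state: UIState, name: str, arguments: str) -> UIState:
    """Apply one model-requested function call to the UI state.

    `arguments` is a JSON-encoded string, which is how chat APIs commonly
    return function-call arguments.
    """
    args = json.loads(arguments)
    if name == "set_theme":
        # Validate before touching the UI: the model may still produce bad values.
        if args.get("mode") not in ("light", "dark"):
            raise ValueError(f"unsupported theme: {args.get('mode')!r}")
        state.theme = args["mode"]
    elif name == "open_panel":
        state.open_panel = args["panel"]
    else:
        raise ValueError(f"unknown function: {name}")
    return state


state = handle_function_call(UIState(), "set_theme", '{"mode": "dark"}')
print(state)
```

`UI_FUNCTIONS` is what would be sent to the model; `handle_function_call` runs on the client once the model picks a function, so the UI only ever changes through code you control.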

Navigating the complex decision of building versus buying a chatbot platform requires exploring strategic considerations, industry insights, and practical approaches. That is a fancy way of saying it is a tough decision!

From full creative freedom to strict fact matching, organizations can design chatbots that meet their specific risk tolerance and communication needs. The key is choosing an approach that serves the user while protecting the brand.

Ensuring proper communication is critical — don’t let a chatbot fail to chat! Bots must strike a balance between confirming user information and proceeding with a reasonable assumption of correctness. Explicit and implicit confirmations are the primary tools to achieve this balance.
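One way to strike that balance is to choose a confirmation style per input. The minimal Python sketch below assumes the bot has a recognizer confidence score between 0 and 1 and a flag for high-stakes actions; the thresholds, names, and wording are all illustrative assumptions.

```python
from enum import Enum


class Confirmation(Enum):
    NONE = "none"          # proceed, assuming the input is correct
    IMPLICIT = "implicit"  # restate the value while moving on
    EXPLICIT = "explicit"  # stop and ask the user to confirm


def choose_confirmation(confidence: float, high_stakes: bool,
                        explicit_below: float = 0.6,
                        implicit_below: float = 0.9) -> Confirmation:
    """Pick a confirmation style from a recognizer confidence score in [0, 1].

    The default thresholds are illustrative assumptions, not recommended values.
    """
    if high_stakes or confidence < explicit_below:
        return Confirmation.EXPLICIT
    if confidence < implicit_below:
        return Confirmation.IMPLICIT
    return Confirmation.NONE


def confirmation_text(style: Confirmation, slot: str, value: str) -> str:
    """Wording for each style; an empty string means no confirmation is shown."""
    if style is Confirmation.EXPLICIT:
        return f"Just to confirm, your {slot} is {value}. Is that right?"
    if style is Confirmation.IMPLICIT:
        return f"Okay, {slot} set to {value}."
    return ""


style = choose_confirmation(0.75, high_stakes=False)
print(confirmation_text(style, "party size", "4"))
```

The implicit style costs the user no extra turn: the value is echoed back, and the user only needs to speak up if it is wrong.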

Like any software project, building a chatbot requires careful planning. While all software projects fall somewhere along the Waterfall-Agile spectrum, I believe chatbot projects should lean closer to the Agile end, emphasizing rapid prototyping and iteration over extensive upfront planning. This post draws on my experience with numerous chatbot projects and outlines what successful teams have done at the start.

Most chatbots stick to one modality—either text or voice. But as someone who uses subtitles for everything, I wonder why voice bots don’t also include text for accessibility. Is it a limitation in the voice tech stack? Does text clutter the UI? To find out, I decided to build my own streaming-first chatbot interface with both text and voice.

How many developers does it take to build a chatbot? Would you be surprised if I told you that developers are not the most important role on a chatbot project team? In this post, we go from the who to the what, where the what is a chatbot project and the who is you!

You never get a second chance to make a first impression. Chatbots are no different: the first interaction with users sets the tone for the entire user experience. When crafting this initial message, I recommend keeping the 3 C’s in mind: Context, Capabilities, and Call to Action.
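The 3 C’s map naturally onto a small message template. This Python sketch is only an illustration: the `Greeting` class, the `join_options` helper, and the "Acme Bank" bot are hypothetical.

```python
from dataclasses import dataclass
from typing import List


def join_options(items: List[str]) -> str:
    """Join items as 'a', 'a or b', or 'a, b, or c'."""
    if len(items) <= 2:
        return " or ".join(items)
    return ", ".join(items[:-1]) + ", or " + items[-1]


@dataclass
class Greeting:
    context: str             # who and where the bot is
    capabilities: List[str]  # what the bot can help with
    call_to_action: str      # what the user should do next

    def render(self) -> str:
        """Combine the 3 C's into a single opening message."""
        return (f"{self.context} I can help you {join_options(self.capabilities)}. "
                f"{self.call_to_action}")


greeting = Greeting(
    context="Hi, I'm the Acme Bank assistant.",
    capabilities=["check your balance", "find a branch", "report a lost card"],
    call_to_action="What would you like to do?",
)
print(greeting.render())
# Hi, I'm the Acme Bank assistant. I can help you check your balance, find a branch, or report a lost card. What would you like to do?
```

Keeping the three parts as separate fields makes it easy to review each one on its own and to update the capabilities list as the bot grows.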

Context

Context refers to who and where the bot is; it is the foundation upon which everything about the bot is built.

Two weeks ago, I found myself with a new desktop PC and a world of possibilities. And of that whole world of options, I chose NixOS. Not to brag, but I am a burgeoning script kiddie: a few months ago I made the switch from the default macOS Terminal to Kitty, from VS Code to Neovim, and from Finder to lf. I can cat and grep and have a handful of zsh aliases, so installing NixOS shouldn’t be too hard, right?

The other day on Discord, I was trying to convince someone that they should not build a chatbot. Crazy, right?

But here’s the thing: chatbots are an interface, just like buttons and tabs, and just like other interfaces, there are times when they do more harm than good. Just imagine if your phone’s settings app were a chatbot, and you had to type a message or talk to it just to enable or disable airplane mode!

When a big organization or government is looking at using an AI system, trust is often on their minds. There’s a lot of talk about AI hallucinations and lies, like this NY Times article saying GPT-4 hallucinates 3% of the time, or this paper showing that GPT-4 can engage in insider trading and then lie about it. How can folks who work in AI build systems that big clients can trust?

With a review like that, how can you not be interested? If you’re looking for memes about AI Engineering, working with Large Language Models, or the recent AI Engineer Summit, read on!

The matchup we have all been waiting for! A fine-tuned upstart underdog against the reigning champion heavyweight LLM! Will our tiny model prevail?

While my model is training, let’s take a moment to pause and reflect on the process so far, its thorns and roses, and make a few more Bert puns while we’re at it!

You can’t have RAG without a retriever, but what exactly is a retriever? And what kind of retriever am I going to use? This post will take a trip with BERT, and talk about some recent innovations with the model. Let’s jump in!

Parts of a retriever

All retrievers have at least three things in common:

1. A corpus of information is stored somewhere, somehow.
2. When presented with an incoming query, they do some stuff to process it.
3.
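Here is a toy retriever in Python that shows the shape of the first two parts. It swaps in plain bag-of-words term counts and cosine similarity as a stand-in for BERT embeddings, and it assumes the final step ranks the stored documents against the query and returns the best matches. `Retriever`, `vectorize`, and the sample corpus are hypothetical.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple


def vectorize(text: str) -> Counter:
    """Bag-of-words term counts; a stand-in for a learned embedding such as BERT's."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class Retriever:
    def __init__(self, corpus: Dict[str, str]):
        # Part 1: store the corpus, here as precomputed vectors held in memory.
        self.index = {doc_id: vectorize(text) for doc_id, text in corpus.items()}

    def search(self, query: str, k: int = 3) -> List[Tuple[str, float]]:
        # Part 2: process the incoming query into the same vector space.
        q = vectorize(query)
        # Assumed final step: score every document and return the top k.
        scored = [(doc_id, cosine(q, vec)) for doc_id, vec in self.index.items()]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]


retriever = Retriever({
    "bert": "BERT is a transformer encoder",
    "voyager": "Minecraft agents explore worlds",
    "rag": "retrievers rank documents for a query",
})
print(retriever.search("which transformer encoder is BERT", k=1))
```

Swapping `vectorize` for a neural encoder and the dictionary for a vector store changes the quality and scale, but not the overall structure.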

OK, here’s my question:

Why do all these RAG apps use giant f**king models??

- Default LangChain RAG tutorial: GPT-3.5 Turbo (175B params)
- Random RAG tutorial from Google: GPT-3.5 Turbo (175B params)
- Another tutorial: Llama 2 (70B params)

There are more, but you get the point. I’m worked up about this because these foundation models (ChatGPT, Claude, Bard, the whole lot) are so freaking powerful that using them for information retrieval is super inefficient.

Holy shit, it’s done!! Check it out on GitHub here

Getting to this point was a ton of work, and I am so glad that it’s at the point of minimum viability for release. In this post, I want to go through some of the struggles, the triumphs, and what is next for the project.

🪦 The Struggles 🪦

A few things were much, much more difficult than I anticipated. In no particular order, they were:

A big reason why I am researching AI agents so much is that I want to try my hand at building a software development agent of my own named Taylor. The overall goal is for Taylor to be a fully autonomous AI-powered junior developer who can be a genuine asset on a development team. I want to take this idea as far as I can on my own, and use the finished product in future projects to augment my own capabilities.

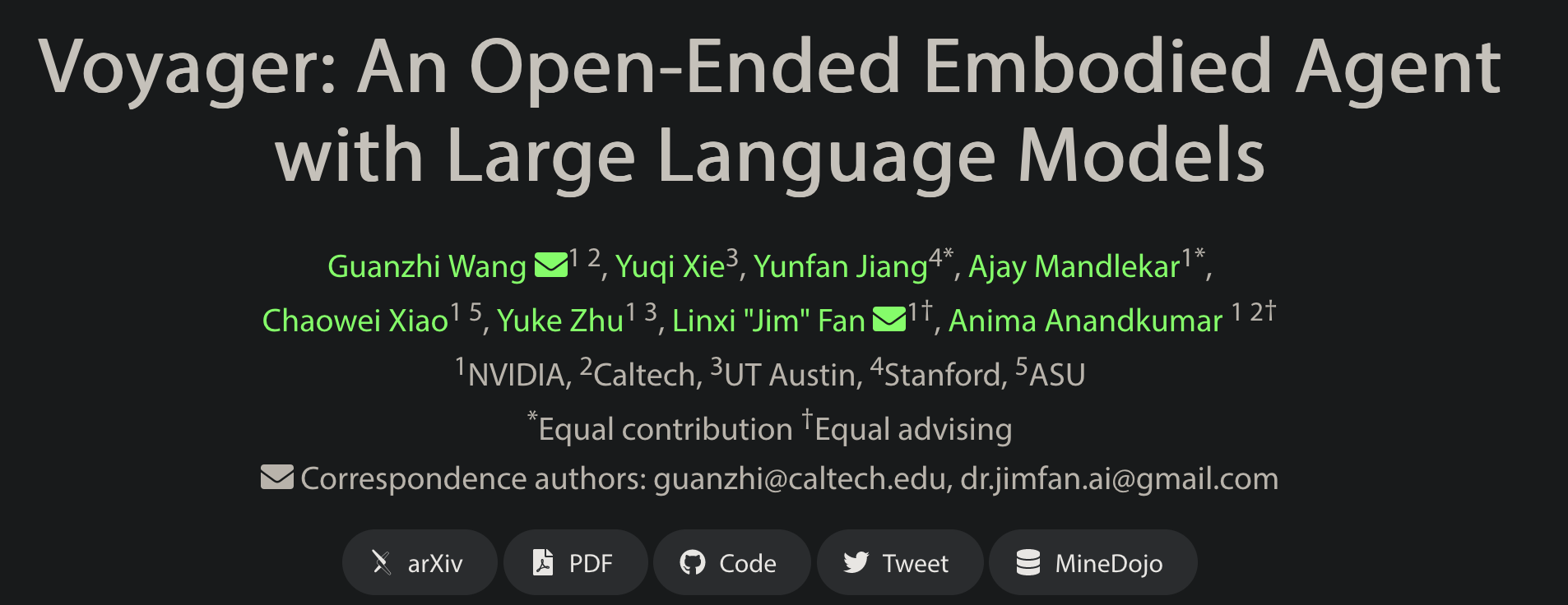

VOYAGER from NVIDIA shows a novel way of using GPT-4 to create an agent that plays Minecraft via an API. Their approach is very agentic and mirrors other development agents like gpt-engineer and smol-developer. In this post, we’re going to break down VOYAGER’s action loop and analyze the fine details of how this agent works.

Many autonomous agents can complete two or three tasks in a row, but VOYAGER, from a team led by NVIDIA, showed the ability to consistently complete over 150.

With all the hype about “AI automating your job away,” there is a lot of talk about the “what” but not the “how.” Agents are today’s best shot at getting an AI to do useful work for you, and in this series I want to go over the latest advances in the open-source AI agents space, domain by domain.

What are AI Agents?

Simply put, an AI agent is a foundation model (like GPT-4 or Llama 2) that has been specially prompted with a task to complete, given a set of human-designed tools to complete it with, and allowed to execute prompts multiple times to generate a solution.
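That definition translates into a short loop. The Python sketch below assumes a hypothetical message format and reply shape (a dict with either `content` for a final answer, or `tool` plus `args` for a tool request); it is not any provider's actual API. `fake_model` stands in for a real foundation model so the loop can run offline, and the `add` tool is a toy.

```python
import json
from typing import Callable, Dict, List

Message = Dict[str, str]


def add(a: float, b: float) -> float:
    """A hypothetical tool; real agents wrap APIs, shells, file editors, and so on."""
    return a + b


TOOLS: Dict[str, Callable[..., float]] = {"add": add}


def run_agent(model: Callable[[List[Message]], dict], task: str, max_steps: int = 5) -> str:
    """Prompt the model repeatedly, running any tool it asks for, until it answers."""
    messages: List[Message] = [
        {"role": "system", "content": f"Complete the task. Tools: {', '.join(TOOLS)}"},
        {"role": "user", "content": task},
    ]
    for _ in range(max_steps):
        reply = model(messages)
        if "tool" not in reply:
            return reply["content"]  # no tool requested: treat as the final answer
        messages.append({"role": "assistant", "content": json.dumps(reply)})
        result = TOOLS[reply["tool"]](**reply["args"])
        messages.append({"role": "tool", "content": json.dumps(result)})
    raise RuntimeError("agent did not finish within max_steps")


def fake_model(messages: List[Message]) -> dict:
    """Stand-in for an LLM: requests one tool call, then answers with its result."""
    if messages[-1]["role"] == "tool":
        return {"content": f"The answer is {messages[-1]['content']}."}
    return {"tool": "add", "args": {"a": 2, "b": 3}}


print(run_agent(fake_model, "What is 2 + 3?"))  # The answer is 5.
```

The `max_steps` cap is a design choice worth keeping in any real agent: without it, a model that never settles on an answer would loop forever.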